See everything. Experience everything.

Add dynamic, autonomous camera framing and room automation to high-impact spaces.

Through Speaker Spotlight technology, the active speaker’s voice drives camera framing without the need for presets, while voice activity detection protects against false audio triggers. With Presenter Spotlight technology, advanced computer vision drives full-body presenter tracking.

COMING SOON

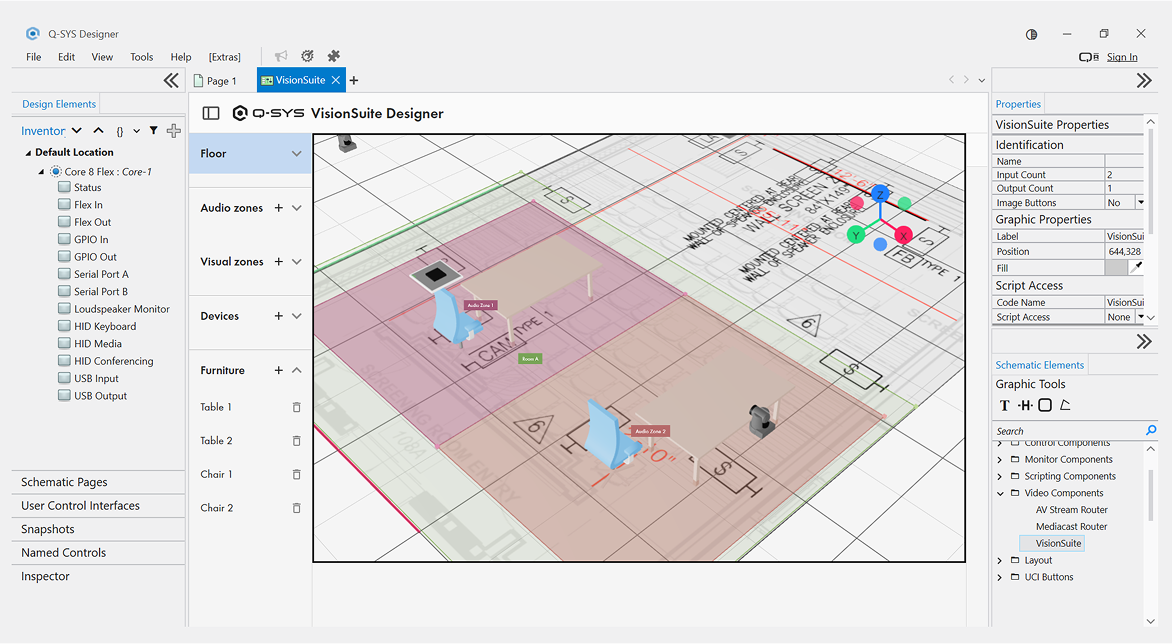

Design, configure, calibrate, and commission using this easy-to-use native tool within Q-SYS Designer.

COMING SOON

Introducing the Q-SYS VSA-100 VisionSuite AI Accelerator. Pair with Q-SYS cameras, audio accessories, and Q-SYS processing to integrate with the leading UC platforms.

Responsive camera switching and framing based on in-room attendees’ voices and locations.

COMING SOON

Feel free to rearrange the room. Camera positioning is dynamic and triggered by audio positioning data.

COMING SOON

Camera positioning triggered by people intentionally speaking, not ambient room noise (such as loud typing or bag rustling).

Dynamic, cinematic camera framing for the presenter based on advanced computer vision.

Autonomous presenter tracking based on full-body recognition, enabling fluid and predictive camera motion and vision-driven automation.

Attendees experience a wow factor from vision- or audio-triggered automations. Shift lighting, toggle displays, activate mics and audio, and control third-party devices.

Discover the latest advancements and use cases.

Create meeting equity for in-room and remote participants.

Upscale with broadcast and performative capabilities.

Balance experiences for the lecture, whiteboard, and student participation.

Free teachers from being tethered to a podium and enable engagement with everyone, both in-room and remote.

Want to see Q-SYS VisionSuite in action? Q-SYS experts will demonstrate Speaker and Presenter Spotlight technologies and how to get the most from your space.